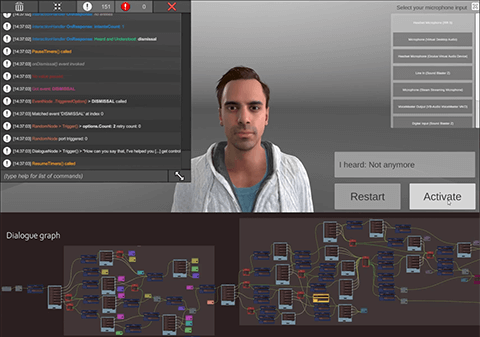

The acting is by Shannon Tracey: https://www.youtube.com/watch?v=nBXPDnD4gyc. I took the audio from the YouTube clip and passed it through AccuLips, then pointed my iPhone at the PC monitor while the video was playing and used Motion LIVE's Live Face to capture the rest of the facial movements.

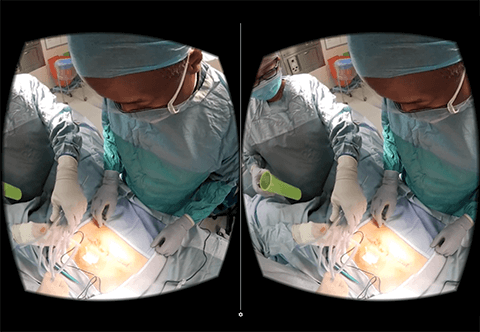

My YouTube channel contains a lot of walkthrough videos explaining my workflow as I create, rig and optimise these characters for use in Unity VR projects.

Project Description

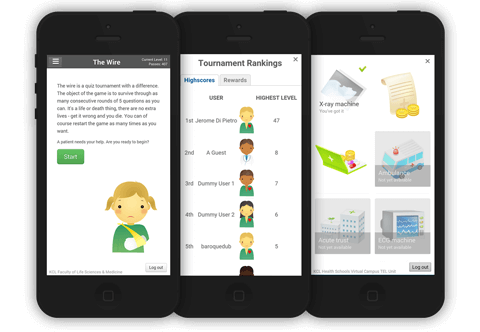

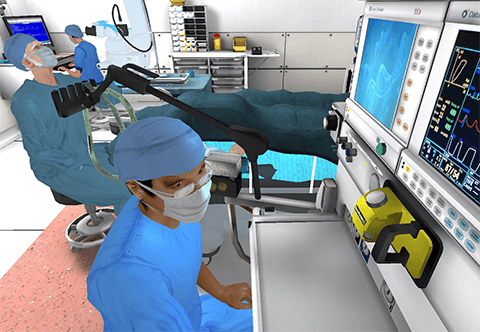

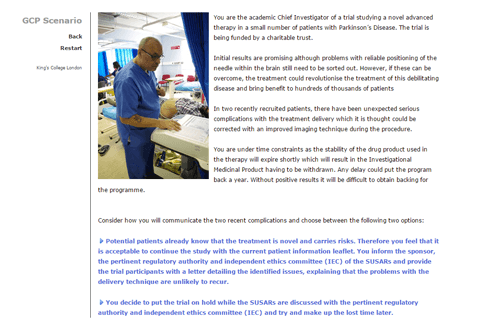

A big part of the work I do in the VRLab is based around social interactions with non-player characters. These digital humans need to strike a careful balance: avoiding the uncanny valley while being realistic enough to evoke real emotions in the participant.

As of May 2021, Epic's MetaHumans are gaining a lot of traction for their expressive facial rigs and photorealistic shaders. On the downside, their body shapes are very simplistic and formulaic, and the choice of clothes is currently limited to two or three outfits. In the work we do, it's important for us to have avatars that represent the diversity of the South London communities our patients live in.

I originally used Adobe Fuse for character creation, then trialled a number of other platforms such as MakeHuman. I am currently using Reallusion's Character Creator 3 and iClone to author a wide range of digital humans that breathe life into our VR experiences. Although it can be buggy, the recent update, which brought ARKit-compatible ExpressionPlus blendshapes and AccuLips lip-sync animation, has definitely made it a tool worth persevering with.

Software used: Character Creator 3 / iClone / Unity.

Project Details

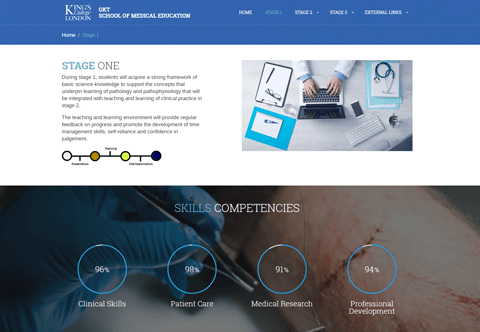

- Client: KCL IoPPN Psychology VRLab

- Status: Ongoing

- Themes: Simulation, AI, VR, Virtual Patients

- Team: Jerome Di Pietro