Project Description

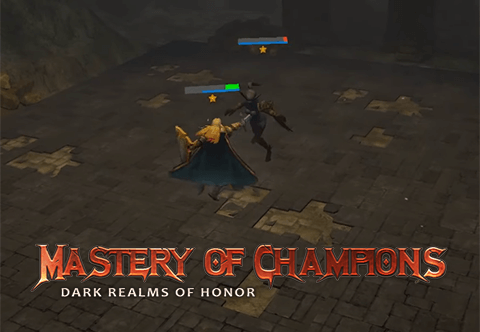

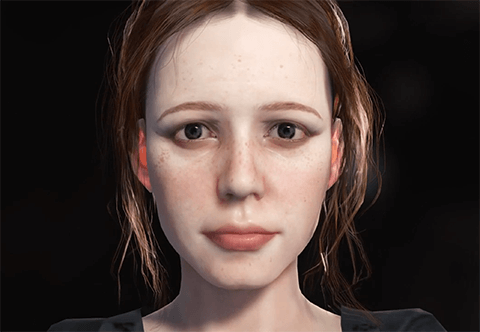

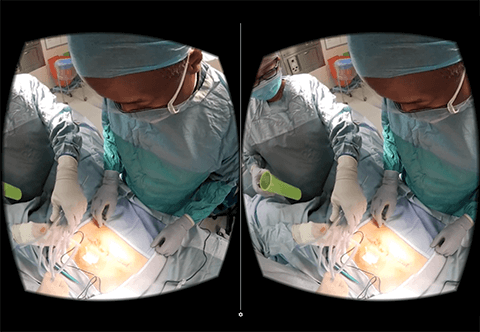

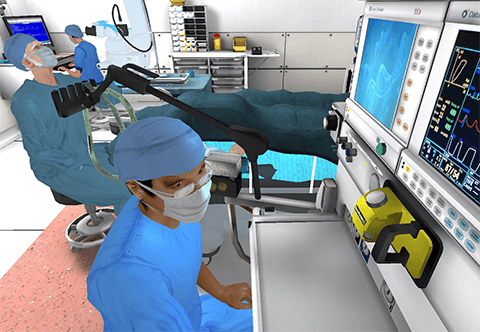

I recently revisited voice-activated, AI-powered conversational agents, using technology that simply wasn't available when I last tried this three years ago. The Oculus VoiceSDK sends microphone audio to Wit.ai, which handles both Automatic Speech Recognition (ASR, i.e. speech-to-text) and Natural Language Processing (NLP). Wit.ai then sends back responses as 'Intents', 'Entities' and 'Traits', which are used as triggers in a branching conversation graph. Depending on the intents received (e.g. a dismissal like "go away", or an assertion like "I don't need you"), the graph nodes trigger different voice audio and facial mocap animation clips on the digital human.
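To make the intent-driven branching concrete, here is a minimal sketch in Python. It is not the project's Unity/xNode implementation and does not call the Voice SDK or Wit.ai. The intent names `dismissal` and `assertion` follow the examples above; the node ids, clip names and the `Node` and `ConversationGraph` classes are hypothetical, made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    """One step of the conversation: what the avatar says and how it moves."""
    audio_clip: str       # hypothetical voice audio file name
    animation_clip: str   # hypothetical facial mocap clip name
    # Maps an intent name to the id of the next node.
    transitions: Dict[str, str] = field(default_factory=dict)
    # Node to go to when the intent has no matching edge (None = stay put).
    fallback: Optional[str] = None


class ConversationGraph:
    def __init__(self, nodes: Dict[str, Node], start: str) -> None:
        self.nodes = nodes
        self.current = start

    def on_intent(self, intent: str) -> Node:
        """Follow the edge matching `intent` and return the node now active."""
        node = self.nodes[self.current]
        next_id = node.transitions.get(intent, node.fallback)
        if next_id is not None:
            self.current = next_id
        return self.nodes[self.current]


# Hypothetical graph: the avatar taunts, then reacts to how the user responds.
GRAPH_NODES = {
    "taunt": Node(
        "taunt.wav", "taunt_face",
        transitions={"dismissal": "protest", "assertion": "waver"},
    ),
    "protest": Node(
        "protest.wav", "protest_face",
        transitions={"assertion": "waver"},
    ),
    "waver": Node("waver.wav", "waver_face"),
}

graph = ConversationGraph(GRAPH_NODES, start="taunt")
active = graph.on_intent("dismissal")   # e.g. the user said "go away"
print(active.audio_clip, active.animation_clip)
```

In this sketch an intent with no matching edge leaves the graph on its current node. A real agent would more likely play a re-prompt or clarification clip at that point, which the `fallback` field is there to allow.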

This is a proof-of-concept prototype for an app we hope to get funding for. It builds on the work of the AVATAR Therapy project, in which patients who suffer from distressing auditory hallucinations are given a face-to-face dialogue with their inner voices. The goal is to design a conversational agent that will take on the role currently played by a live therapist.

https://www.kcl.ac.uk/research/avatar2

Software used: Unity / xNode / Character Creator.

Project Details

- Client: KCL IoPPN Psychology VRLab

- Status: Ongoing

- Themes: Simulation, VR

- Team: Jerome Di Pietro